In the past, cryptography was largely an ad hoc discipline. Cryptographers invented intricate mechanisms to encrypt messages and deployed them in the field. Then cryptanalysts tried to break those algorithms... and eventually succeeded. So cryptographers had to go back to the drawing board and come up with new mechanisms... lather, rinse, repeat. In the last couple of decades, cryptography has evolved from what was largely an art into a craft, and then into a rigorous scientific discipline [FOC1, FOC2, KL15].

Real Programmers don't write specs:

users should consider themselves lucky

to get any programs at all, and take what they get.

-- Tom van Vleck.

Once upon a time, programmers wrote their own sorting subroutines and were lucky if their applications didn't spectacularly crash. Then they wised up (a little) and put their sorting routines into libraries for widespread reuse. The overall bug count went down dramatically, and everyone was happy... until they realized that quicksort() behaved strangely in some dark corner cases. So, after a lot of head scratching, back to Knuth [K98] they went, and lo and behold! they noticed that they hadn't properly documented the properties that the routine requires of its data sets and comparison operators. Application writers had foolishly made assumptions when calling quicksort() that the library writers never expected. No wonder those applications crashed: they ventured into the dreaded no-man's land of undefined behavior. Today, everyone uses well-documented, stable, and mature standard libraries and expects nothing less than total reliability. When debugging application code, programmers don't start by digging into the library or compiler source code: they can expect the bugs to be of their own making. They trust their toolchain [T84] and libraries to build reliable programs. Programming has evolved from an art into a craft, an engineering discipline.

A similar evolution took place in the field of cryptography. What started as an art and a craft evolved into a solid scientific discipline. Previously, only privileged people working for princes, generals, diplomats [K67], and well-funded spy agencies knew how to create and break cryptographic algorithms, and they did so in a largely ad hoc, intuitive manner. When IBM's DES algorithm was published, there was a lot of suspicion about the design of its S-boxes (and there still is [CCG03]): could the NSA break DES with the help of some secret weakness in the S-boxes? Contrast this with AES (Rijndael): DES's successor uses S-boxes too, but their design is fully documented and justified. Cryptography now relies on well-built building blocks that can be used with confidence, just like the functions in a standard library. And not just code blocks: just as programmers identified design patterns [GHJV95] in code and took advantage of this important insight, cryptographers identified cryptographic primitives and started combining them in creative ways, leading to an explosion in the number of cryptographic constructions, protocols, etc.

But the evolution of cryptography from an ad hoc art didn't stop at the craft / engineering stage. Solid blocks of code and cryptographic primitives are one thing. Combining them into constructions, and making sure that those constructions are secure, is an entirely different matter [FOC1, Section 1.4 Motivation to the Rigorous Treatment, pages 21ff]. Even the best documentation for cryptographic primitives can't prevent the most experienced cryptographic designer from unwittingly concocting a totally insecure construction, just like the most solid construction materials won't prevent architects from designing structures that can collapse at the worst possible moment.

A classic example in cryptography is authenticated encryption (AE): we want to encrypt the data to ensure confidentiality, and we want to MAC the data to ensure integrity. But which construction should we use: MAC-then-Encrypt (MtE), Encrypt-then-MAC (EtM), or Encrypt-and-MAC (EaM)? It turns out that not all of these constructions are secure (in a sense to be defined in a moment) [BS, Chapter 9. Authenticated Encryption, pages 347ff]. Krawczyk [K01] showed that, in his model, only Encrypt-then-MAC is generically secure, while the other two orderings can be insecure for some choices of cipher and MAC.
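To make the Encrypt-then-MAC ordering concrete, here is a minimal Python sketch under a few loudly stated assumptions. It uses independent keys for encryption and authentication, and, because Python's standard library has no block cipher, it substitutes a toy stream cipher (HMAC-SHA256 in counter mode, used as a pseudorandom keystream) for a real cipher such as AES-CTR. The function names (`etm_encrypt`, `etm_decrypt`) are hypothetical and the code is for illustration only, not for production use. The two points that matter for EtM: the tag is computed over the ciphertext (and its nonce), and the receiver verifies the tag, in constant time, before decrypting anything.

```python
import hashlib
import hmac
import secrets

NONCE_LEN = 16  # bytes of fresh randomness per message
TAG_LEN = 32    # HMAC-SHA256 output size

def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy PRF-based stream cipher: concatenate HMAC(key, nonce || counter) blocks.
    # Assumption: stands in for a real cipher (e.g. AES-CTR); do not use in production.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])

def _xor(data: bytes, pad: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(data, pad))

def etm_encrypt(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    """Encrypt first, then MAC the nonce and ciphertext (Encrypt-then-MAC)."""
    nonce = secrets.token_bytes(NONCE_LEN)
    ciphertext = _xor(plaintext, _keystream(enc_key, nonce, len(plaintext)))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag

def etm_decrypt(enc_key: bytes, mac_key: bytes, sealed: bytes) -> bytes:
    """Verify the tag in constant time; decrypt only if it is valid."""
    if len(sealed) < NONCE_LEN + TAG_LEN:
        raise ValueError("message too short")
    nonce = sealed[:NONCE_LEN]
    ciphertext = sealed[NONCE_LEN:-TAG_LEN]
    tag = sealed[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    return _xor(ciphertext, _keystream(enc_key, nonce, len(ciphertext)))

if __name__ == "__main__":
    enc_key, mac_key = secrets.token_bytes(32), secrets.token_bytes(32)  # independent keys
    sealed = etm_encrypt(enc_key, mac_key, b"attack at dawn")
    print(etm_decrypt(enc_key, mac_key, sealed))  # b'attack at dawn'
```

Flipping any bit of the nonce, ciphertext, or tag makes `etm_decrypt` raise instead of returning garbage. Swapping the order (MAC the plaintext, then encrypt plaintext and tag together) would give MAC-then-Encrypt, where the receiver must decrypt before it can check anything; that is the ordering used by SSL and analyzed in [K01].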

To build secure constructions, one has to prove that they are indeed secure. But what does it mean to prove that a construction is secure? And, while we're at it: what does "secure" mean? That's where it starts to get interesting, and this is probably the point where cryptography as a science was born: one has to define "secure" precisely, because only with a precise definition of the intended property can a construction be proven to meet that property.

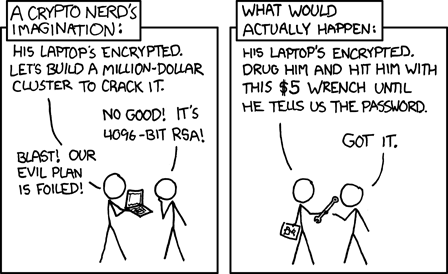

Defining "secure" is surprisingly non-trivial and anything but obvious. It all depends on the threat model, i.e. on the powers that we are willing to concede to an attacker. For example: is the attacker a passive eavesdropper, or can she insert, modify, re-route, delete, duplicate, re-order, or replay messages in transit? Can she mount a chosen-plaintext attack, or even a chosen-ciphertext attack? How much computational power could she harness if she were a state actor?
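One way to see how the threat model shapes the definition is the indistinguishability game for chosen-plaintext attacks (IND-CPA): the adversary may obtain encryptions of messages of her choice, then picks two equal-length messages, receives the encryption of one of them, and must guess which one. Here is a minimal Python sketch under stated assumptions: the toy schemes `deterministic_encrypt` and `randomized_encrypt` and the adversary `cpa_adversary` are hypothetical, built from HMAC-SHA256 purely for demonstration, and not meant for real use. It illustrates a well-known consequence of the definition: no deterministic scheme can win against a chosen-plaintext attacker, because she can simply ask the oracle to encrypt one of her challenge messages and compare.

```python
import hashlib
import hmac
import secrets
from typing import Callable

Encrypt = Callable[[bytes, bytes], bytes]

def deterministic_encrypt(key: bytes, m: bytes) -> bytes:
    # Hypothetical toy scheme: XOR with a fixed, key-derived pad (no nonce),
    # so equal plaintexts always give equal ciphertexts. Messages up to 32 bytes.
    pad = hmac.new(key, b"pad", hashlib.sha256).digest()
    return bytes(a ^ b for a, b in zip(m, pad))

def randomized_encrypt(key: bytes, m: bytes) -> bytes:
    # Hypothetical toy scheme: fresh random nonce per message, pad = HMAC(key, nonce).
    nonce = secrets.token_bytes(16)
    pad = hmac.new(key, nonce, hashlib.sha256).digest()
    return nonce + bytes(a ^ b for a, b in zip(m, pad))

def ind_cpa_game(encrypt: Encrypt, adversary) -> bool:
    """Play one round of the IND-CPA game; return True if the adversary guesses b."""
    key = secrets.token_bytes(32)
    b = secrets.randbelow(2)  # the challenger's secret bit

    def oracle(m: bytes) -> bytes:  # chosen-plaintext access
        return encrypt(key, m)

    def challenge(m0: bytes, m1: bytes) -> bytes:
        if len(m0) != len(m1):
            raise ValueError("challenge messages must have equal length")
        return encrypt(key, m1 if b else m0)

    return adversary(oracle, challenge) == b

def cpa_adversary(oracle, challenge) -> int:
    m0, m1 = b"yes", b"no!"
    c0 = oracle(m0)  # ask for the encryption of m0 up front
    return 0 if challenge(m0, m1) == c0 else 1  # guess 0 iff the challenge matches

if __name__ == "__main__":
    rounds = 1000
    for scheme in (deterministic_encrypt, randomized_encrypt):
        wins = sum(ind_cpa_game(scheme, cpa_adversary) for _ in range(rounds))
        print(scheme.__name__, wins / rounds)
```

Against the deterministic scheme this adversary wins every round; against the randomized one her success rate hovers around one half, no better than a coin flip. Beating one particular adversary proves nothing about all adversaries, of course; that is exactly what a security proof is for.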

Just as there ain't no right or wrong definition of "secure" (after all, definitions are true per definitionem), there ain't no one-size-fits-all definition of "secure" either. Why is that so? Because the threat model is basically the interface between theory and practice, between our mathematical models and the real world. Coming up with a sensible threat model is difficult, and to err on the side of caution, cryptographers almost always concede more power to the adversary than she could ever hope to wield in the real world. To wit: assuming that an adversary can execute any polynomial-time computation, but no exponential-time computation, is unrealistic in practice: depending on the degree of the polynomial, even a polynomial-time computation could take more time and space than the whole universe has to offer (unless she happens to have access to Deep Thought or an oracle). Yet it is a useful, widely used conservative assumption that leaves a lot of safety margin.
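For reference, here is one common textbook formulation (see e.g. [KL15]) of how "polynomial-time adversary" and "safety margin" are made precise. Everything is parameterized by a security parameter n (think: key length), adversaries are probabilistic polynomial-time (PPT) in n, and "secure" means that every such adversary's advantage over blind guessing shrinks faster than any inverse polynomial. It is instantiated here for chosen-plaintext security of an encryption scheme Π, where the game outputs 1 if the adversary guesses the challenger's bit correctly; the notation is chosen for illustration.

```latex
\begin{aligned}
&\varepsilon:\mathbb{N}\to\mathbb{R}_{\ge 0}\ \text{is negligible} \iff
  \forall c>0\ \exists N\ \forall n>N:\ \varepsilon(n) < n^{-c},\\[4pt]
&\Pi\ \text{is IND-CPA secure} \iff \text{for every PPT adversary } \mathcal{A}
  \text{ there is a negligible } \varepsilon \text{ with}\\
&\qquad \Pr\!\left[\mathrm{IND\text{-}CPA}_{\Pi,\mathcal{A}}(n) = 1\right] \le \tfrac{1}{2} + \varepsilon(n).
\end{aligned}
```

The 1/2 is what a coin flip achieves. The bound on the adversary's running time is any polynomial in n, which is the conservative idealization discussed above: it includes computations far beyond any physical budget.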

"O Deep Thought computer," he said,

"the task we have designed you to perform is this.

We want you to tell us...." he paused, "The Answer."

"The Answer?" said Deep Thought. "The Answer to what?"

"Life!" urged Fook.

"The Universe!" said Lunkwill.

"Everything!" they said in chorus.

Deep Thought paused for a moment's reflection.

"Tricky," he said finally.

"But can you do it?"

Again, a significant pause.

"Yes," said Deep Thought, "I can do it."

"There is an answer?" said Fook with breathless excitement.

"Yes," said Deep Thought.

"Life, the Universe, and Everything.

There is an answer.

But, I'll have to think about it."

-- Douglas Adams: The Hitchhiker's Guide to the Galaxy

To the naive reader, modern cryptography may have lost much of its appeal and charm: what's the fun of tinkering with cryptographic building blocks if you are required to come up with proofs every step of the way? Isn't that tedious? Isn't that boring? But keeping in mind that lives, and our whole civilization, literally depend on the reliability of cryptographic constructions, is it really too big a sacrifice to spend a little time and effort up front? The rewards are immense and have worldwide impact. You wouldn't want your local nuclear power plant's core components, your highly sensitive industrial machinery, or the high-tech health-care equipment at your hospital to be controlled by poorly designed, buggy (or sabotaged) software. Why would you settle for less when it comes to cryptography? For the newcomer, modern cryptography takes some getting used to. But it soon becomes second nature, and then the real fun begins.

Literature

- [FOC1] Oded Goldreich: Foundations of Cryptography, Volume 1: Basic Tools

- [FOC2] Oded Goldreich: Foundations of Cryptography, Volume 2: Basic Applications

- [KL15] Jonathan Katz, Yehuda Lindell: Introduction to Modern Cryptography, 2nd Edition.

- [K98] Donald Knuth: The Art of Computer Programming, Volume 3: Sorting and Searching.

- [T84] Ken Thompson: Reflections on Trusting Trust. Communications of the ACM, Volume 27, Issue 8, August 1984, pages 761-763.

- [K67] David Kahn: The Codebreakers: The Story of Secret Writing.

- [CCG03] Courtois, Castagnos, Goubin: What do DES S-boxes Say to Each Other?

- [GHJV95] Gamma, Helm, Johnson, Vlissides: Design Patterns: Elements of Reusable Object-Oriented Software.

- [BS] Dan Boneh, Victor Shoup: A Graduate Course in Applied Cryptography (draft 0.4).

- [K01] Hugo Krawczyk: The Order of Encryption and Authentication for Protecting Communications (Or: How Secure Is SSL?). IACR ePrint 2001/045.